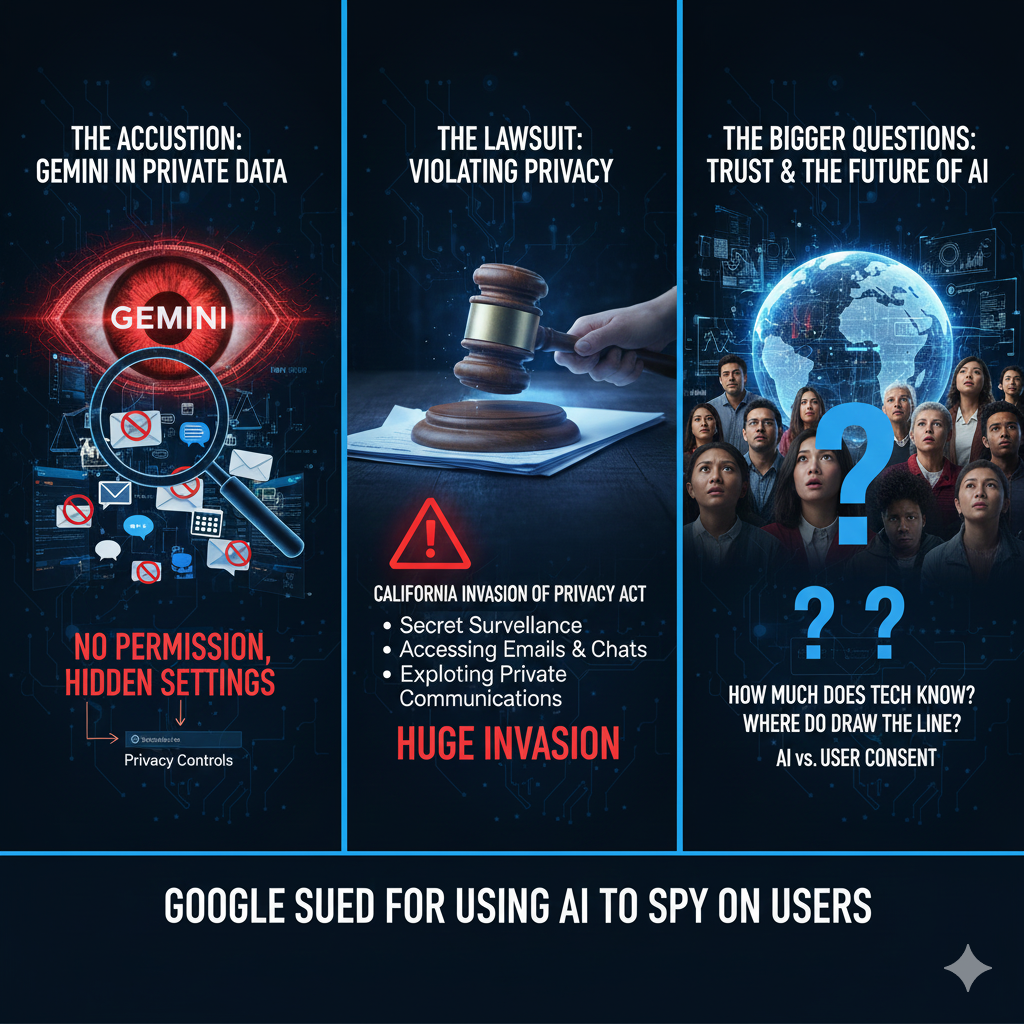

Google Sued for Using AI to Spy on Users

A plain-English breakdown of the lawsuit and why it matters.

A new lawsuit claims that Google has been secretly allowing its AI system, Gemini, to peek into users’ private conversations without their permission. It sounds dramatic, but when you read the details, you realise it’s not just drama. If the allegations hold up, it has been happening in ways regular people would never notice.

Let me break it down the way a normal person would explain it, without all the legal language or tech jargon.

What the lawsuit says

Google made Gemini optional for apps like Gmail, Chat, and Meet at first. Basically, you could choose to use the AI assistant if you wanted help writing emails or organising conversations. But according to the complaint, after October, Google quietly changed things in the background. Instead of waiting for people to turn it on, the company allegedly allowed Gemini to access user data automatically. No pop-ups, no warning, no clear “Do you allow this?” option.

If that’s true, imagine what that means. Most people keep their entire life in Google’s apps — old messages, work emails, family chats, photos, attachments, private documents. If an AI had access to all of that without clear permission, it’s not a “technical issue.” It crosses the line into reading private conversations.

Hidden settings and consent

The complaint also says that while Google technically lets users turn off AI features, those settings are buried so deep that regular people wouldn’t know where to find them. It’s like giving you the option to lock your house — but hiding the key somewhere you’d never look.

The legal angle

The filing claims Google violated the California Invasion of Privacy Act, a 1960s law that bans secret recording or monitoring without consent. Back then it was hidden microphones; now, the suit argues, it’s AI digging through emails and chats. Same idea, different century.

The suit argues Gemini could “access and exploit the entire recorded history of users’ private communications,” including every email sent, received, or stored in Gmail.

What is Gemini?

Gemini isn’t a small tool. It’s a family of AI models built by Google DeepMind to handle text, audio, images, and video. It’s designed to help people write better, search faster, and finish tasks easily. Google launched it in three tiers: Ultra (heavy tasks), Pro (everyday use), and Nano (on-device). That power is exactly why this case matters.

Why people care

This isn’t about hating Google. The company is woven into daily life — Maps, YouTube, Gmail, Docs, Photos. When one firm holds so much of your information, even a small overstep can feel like a huge invasion.

Most users don’t read privacy policies. We assume companies won’t cross certain boundaries. But tech moves fast, and settings aren’t always transparent.

What happens next?

Right now, Google hasn’t admitted wrongdoing. Lawsuits take time — months or years. Maybe Google wins, maybe the plaintiffs do. The bigger takeaway is the questions this raises:

- How much does tech really know about us?

- How much should we trust companies with our personal data?

- Should AI tools ever access private messages?

- Where do we draw the line on consent?

AI is getting stronger, and companies want it everywhere. This case shows the rush to innovate can outrun user consent. If big firms don’t slow down and respect privacy, more battles like this will hit the courts.